AWS Lambda functions are a serverless solution for running code in the cloud without setting up your own servers. The primary downside is that initialization times can be high, leading to increased latency. With Provisioned Concurrency, you can solve this issue.

What Is Provisioned Concurrency?

Lambda functions run in their own "execution environments," which are usually started up automatically when a request is made. After a function invocation, the environment is typically kept "warm" for a while (commonly cited as around 5-15 minutes, though AWS doesn't guarantee a figure), so the initialization code doesn't have to run again for requests that arrive in that window.

However, after that period, or if multiple requests need to be served simultaneously, a new execution environment has to be spun up. The extra startup latency this adds is called a "cold start." It can be especially pronounced with runtimes that do a lot of work at startup, such as the JIT compilation done by Java and .NET.

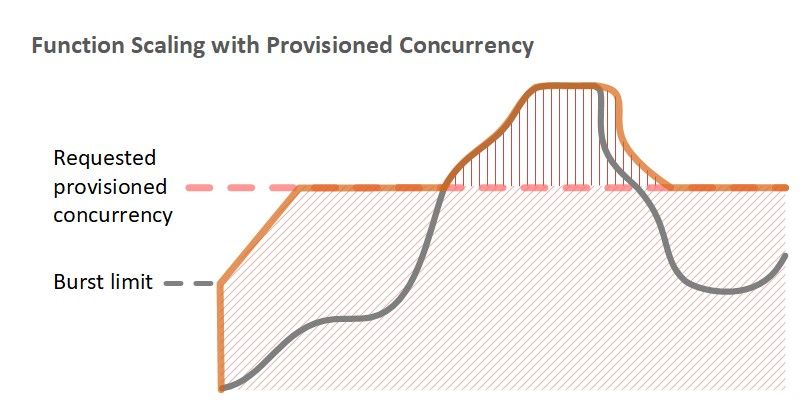

To solve this, AWS has a feature called Provisioned Concurrency, where you can essentially reserve a certain number of execution environments to be permanently warm throughout the day. This means all the initialization code is run in advance, and you don't have to experience cold starts.

If you're relying on Lambda to serve user-facing requests, you might want to consider provisioned concurrency even if it ends up costing a bit more. Even though cold starts usually only account for around 1% of requests, that 1% can mean seconds or more spent waiting for an application to load, though this will depend on the runtime and size of your code.
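To see how often cold starts actually affect your own function, you can count them in its logs. The sketch below assumes you have saved the function's log messages to a local file, that each invocation produces a line starting with REPORT, and that cold-start invocations include an "Init Duration" field on that line. The function name cold_start_percent is made up for this example.

```shell
# cold_start_percent LOGFILE
# Prints the percentage of invocations that were cold starts.
# Assumption: each invocation logs one line beginning with "REPORT", and only
# cold starts include an "Init Duration" field on that line.
cold_start_percent() {
  awk '
    /^REPORT/ { total++; if ($0 ~ /Init Duration/) cold++ }
    END {
      if (total == 0) { print "0.0"; exit }
      printf "%.1f\n", 100 * cold / total
    }
  ' "$1"
}
```

Calling cold_start_percent lambda.log prints a single number. If your log export prefixes each line with a timestamp, the ^REPORT anchor would need adjusting.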

How Much Does Provisioned Concurrency Cost?

Unlike Reserved EC2 Instances, provisioned concurrency is still mostly "pay for what you use" pricing, like the rest of Lambda. You pay a small fee for each hour you provision each environment, then pay for Lambda requests like normal.

However, because the traffic is more predictable on AWS's end, and it's cheaper to not have to be running initialization code all the time, the compute rate for requests served by provisioned concurrency is actually lower. There's no penalty for going over the provisioned amount either; the extra requests are simply served on demand and billed at standard pricing.

Overall, provisioned concurrency can be slightly cheaper (around 5-10%) if you have very predictable traffic and reserve exactly that much capacity. However, it can also be a bit more expensive in some cases. You'll want to check your analytics and plug them into AWS's Lambda Pricing Calculator to learn more.
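As a rough way to reason about where the savings start, you can work out a break-even utilization. The sketch below assumes three per-GB-second rates that you look up yourself on the pricing page: the hourly-style fee for keeping an environment provisioned, the standard on-demand compute rate, and the discounted compute rate for provisioned requests. It ignores per-request charges and only compares compute. The function name and argument order are made up for this example.

```shell
# break_even_utilization PC_RATE OD_RATE PC_COMPUTE_RATE
# All three rates are per GB-second and are supplied by you; nothing is
# hard-coded. For a fraction u of provisioned time spent serving requests:
#   provisioned cost = PC_RATE + PC_COMPUTE_RATE * u
#   on-demand cost   = OD_RATE * u
# Setting them equal gives u = PC_RATE / (OD_RATE - PC_COMPUTE_RATE).
break_even_utilization() {
  awk -v pc="$1" -v od="$2" -v pcc="$3" 'BEGIN {
    if (od <= pcc) { print "n/a"; exit }
    printf "%.2f\n", pc / (od - pcc)
  }'
}
```

With placeholder rates, break_even_utilization 3 16 10 prints 0.50, meaning provisioned environments would need to be busy at least half the time to beat on-demand pricing under those made-up numbers.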

Enabling Provisioned Concurrency

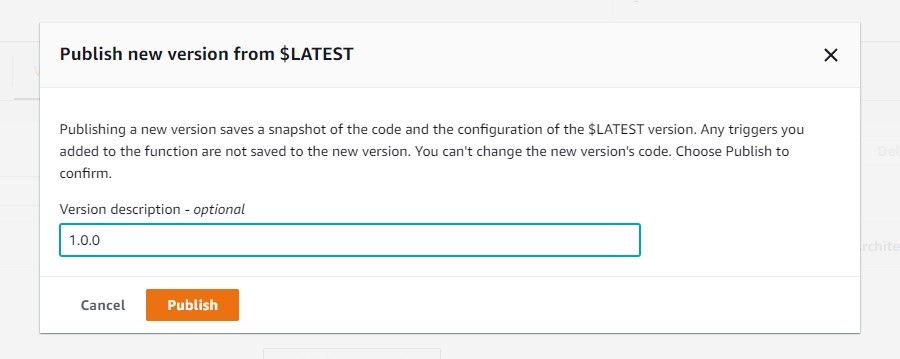

Turning on provisioned concurrency is pretty simple, but it has one downside: it can't point to the default $LATEST version. $LATEST always refers to the most recent unpublished code, so it can change at any time, and provisioned concurrency needs a specific published version to reserve. So, you'll need to publish a new version from Lambda, if you don't already have one:

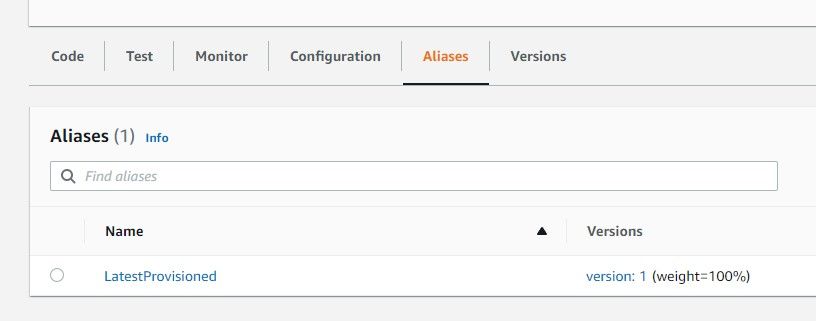

Then, configure an alias to point to that version. This alias can be updated, which will trigger an update for the provisioned environments.

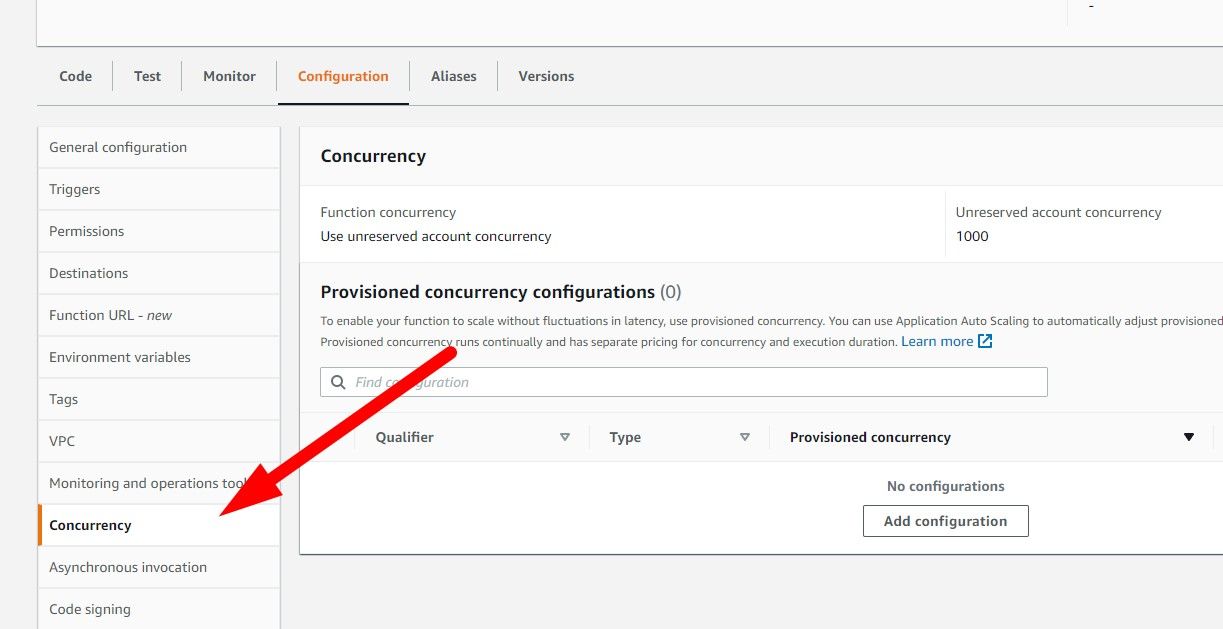

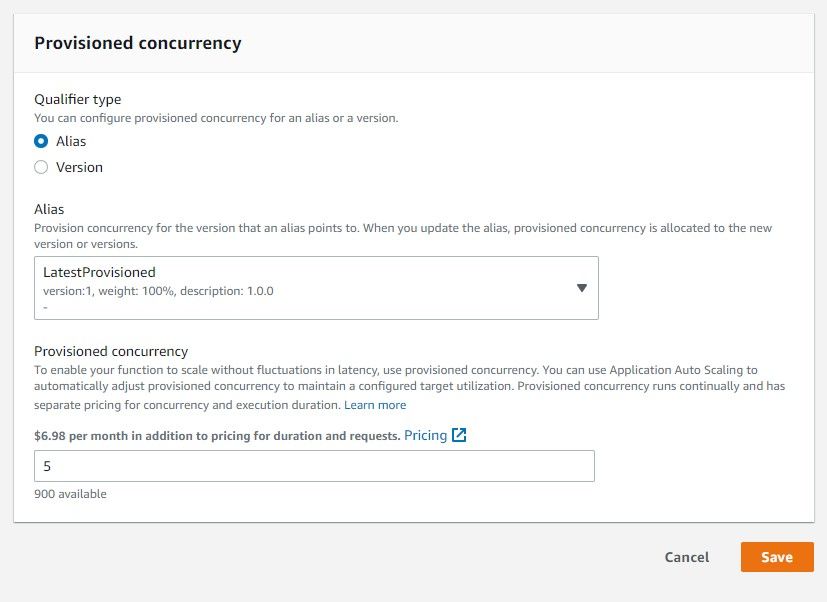
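The publish-and-alias steps can also be scripted from the CLI. This is a minimal sketch that assumes the alias already exists (for a first-time setup you would use aws lambda create-alias with the same arguments instead of update-alias). The function name publish_and_point_alias is made up for this example.

```shell
# publish_and_point_alias FUNCTION ALIAS
# Publishes a new version of FUNCTION, then repoints the existing ALIAS at it.
publish_and_point_alias() {
  local fn="$1" alias_name="$2" version
  # --query/--output text reduce the JSON response to just the version number.
  version=$(aws lambda publish-version \
    --function-name "$fn" \
    --query Version --output text) || return 1
  aws lambda update-alias \
    --function-name "$fn" \
    --name "$alias_name" \
    --function-version "$version"
}
```

For example, publish_and_point_alias MyFunction LatestProvisioned. Note that publish-version only creates a new version when the code or configuration has changed since the last published one.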

Once your alias is set up, you can add a new concurrency configuration from the Lambda's settings, under Configuration > Concurrency. You can also configure this directly from the alias settings.

The settings for provisioned concurrency are simple---select an alias, and enter an amount to provision.

You can also set and update this value using the AWS API or CLI, which can be used to automate it throughout the day:

aws lambda put-provisioned-concurrency-config --function-name MyFunction \
  --qualifier LatestProvisioned --provisioned-concurrent-executions 10
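Provisioning isn't instant, so a script that changes the value may want to wait until the environments are ready. The sketch below polls get-provisioned-concurrency-config and checks its Status field, which reports IN_PROGRESS, READY, or FAILED. The function name, the retry count, and the delay are assumptions chosen for this example.

```shell
# wait_for_provisioned FUNCTION ALIAS [MAX_TRIES] [SLEEP_SECONDS]
# Returns 0 once the provisioned concurrency config reports READY,
# 1 on FAILED, on a CLI error, or after MAX_TRIES polls.
wait_for_provisioned() {
  local fn="$1" alias_name="$2" tries="${3:-30}" delay="${4:-10}" status i
  i=0
  while [ "$i" -lt "$tries" ]; do
    status=$(aws lambda get-provisioned-concurrency-config \
      --function-name "$fn" --qualifier "$alias_name" \
      --query Status --output text) || return 1
    case "$status" in
      READY)  return 0 ;;
      FAILED) echo "provisioning failed" >&2; return 1 ;;
    esac
    i=$((i + 1))
    sleep "$delay"
  done
  echo "timed out; last status: $status" >&2
  return 1
}
```

A typical call would be wait_for_provisioned MyFunction LatestProvisioned, run right after the put-provisioned-concurrency-config command.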

Autoscaling With Provisioned Concurrency

Since provisioned concurrency can be adjusted throughout the day, it can also be hooked up to AWS's Application Auto Scaling to adjust it based on usage. Hooking this up is simple and only takes a few commands from the AWS CLI or API, since there's no management console for this yet.

First, you'll need to register the Lambda function as a scaling target. Here, you'll need to edit the name of the function (MyFunction) and the alias (LatestProvisioned), and also adjust the min and max capacity ranges.

aws application-autoscaling register-scalable-target --service-namespace lambda \
  --resource-id function:MyFunction:LatestProvisioned --min-capacity 2 --max-capacity 10 \
  --scalable-dimension lambda:function:ProvisionedConcurrency

Then, you can enable an auto scaling policy, using the function name and alias as the resource ID, and configuring it with a JSON scaling policy. This example sets it to scale up and down when LambdaProvisionedConcurrencyUtilization goes above or below 70%.

aws application-autoscaling put-scaling-policy --service-namespace lambda \
  --scalable-dimension lambda:function:ProvisionedConcurrency --resource-id function:MyFunction:LatestProvisioned \
  --policy-name my-policy --policy-type TargetTrackingScaling \
  --target-tracking-scaling-policy-configuration '{ "TargetValue": 0.7, "PredefinedMetricSpecification": { "PredefinedMetricType": "LambdaProvisionedConcurrencyUtilization" }}'

This doesn't catch all cases, such as quick bursts of usage that don't last long, but it works well for consistent traffic, and will save you money in the evening when traffic is low.
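If your traffic follows a known daily pattern, one possible complement to target tracking is a scheduled action on the same scalable target, which changes the min and max capacity at set times. This is a sketch, not a recommendation for specific values: it assumes the target registered earlier (function:MyFunction:LatestProvisioned), and the function name, action names, times, and capacities are all placeholders.

```shell
# schedule_capacity ACTION_NAME CRON_EXPRESSION MIN MAX
# Creates or updates a scheduled action that sets the capacity range of the
# already-registered scalable target at the given time.
schedule_capacity() {
  aws application-autoscaling put-scheduled-action \
    --service-namespace lambda \
    --resource-id function:MyFunction:LatestProvisioned \
    --scalable-dimension lambda:function:ProvisionedConcurrency \
    --scheduled-action-name "$1" \
    --schedule "$2" \
    --scalable-target-action "MinCapacity=$3,MaxCapacity=$4"
}
```

For example, schedule_capacity morning-ramp-up "cron(0 8 * * ? *)" 10 20 would raise the range each morning, and a second call with an evening cron expression and lower numbers would bring it back down. Cron expressions here are evaluated in UTC unless you specify a time zone.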