Lambda functions are a crucial part of many serverless deployments on Amazon Web Services. They aren't magic, though: code still has to be loaded and started on real hardware, which leads to downsides like cold starts. Provisioned Concurrency can help alleviate the problem.

What Are "Cold Starts"?

"Cold Starts" are a big issue for Lambda, especially when considering it for a latency-sensitive application. The term refers to the startup time required to get a Lambda function's execution environment up and running from scratch.

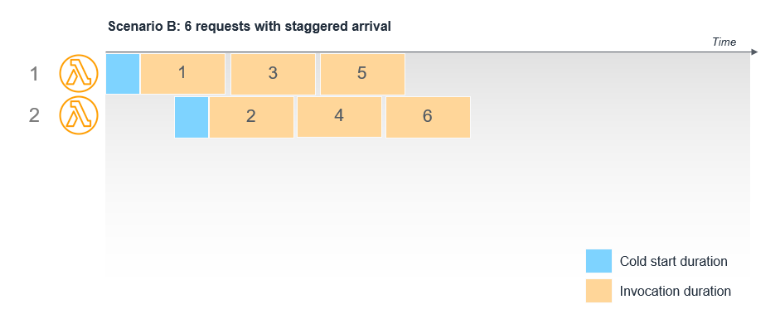

Lambda functions will be kept "warm" for a while after invoking them. Commonly quoted figures are around 5 minutes for non-VPC functions and 15 minutes for VPC functions, but AWS does not publish or guarantee an idle timeout, so treat these as rough approximations. During this period, if the function is called again and a warm environment is free, it will respond without the initialization delay. This is great for services that experience consistent, regular traffic.

However, if your code hasn't run in a while, or if it needs to scale up and run multiple concurrent functions, it will be started from scratch. According to analysis from AWS, cold starts occur in under 1% of requests in production workloads, which is acceptable for many scenarios.

When a cold start does happen, though, it can be expensive. Depending on the runtime you use (Java and .NET both take a while to load and JIT compile), a cold start could delay function invocation by multiple seconds. This can be unacceptable for latency-sensitive applications.

No More Cold Starts

Lambda's Provisioned Concurrency mode can help solve this problem. You can think of it like reserved instances for Lambda functions: you are essentially paying for a fixed number of execution environments, and Lambda initializes them ahead of time and keeps them warm for as long as the configuration is in place.

This has major benefits, including the nearly complete removal of startup latency for requests served by the provisioned environments. Initialization code still runs, but it runs ahead of time rather than while a caller is waiting, so optimizing it becomes far less pressing. This is a major benefit for JIT-compiled languages like Java and C#/.NET, which can have large deployment packages and long startup times.

Compared to the prior example, where functions are started cold, provisioned concurrency starts them all in advance and keeps them warm. When an invocation is needed, Lambda will use the warm functions to execute it.

However, it does come with its own drawbacks. Due to how Lambda selects versions for functions, provisioned concurrency does not work with the unpublished $LATEST version. You will need to publish a version, point an alias at it, provision concurrency for that alias, and then update the alias whenever you publish a new version.
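The publish, alias, and provision steps can be sketched with the AWS SDK for Python. This is a minimal sketch under assumptions, not a complete deployment script: the helper name `enable_provisioned_concurrency`, the function name `my-function`, and the alias `live` are placeholders, and the helper assumes the alias does not exist yet. The client is passed in as a parameter so the sketch has no hard dependency on AWS credentials.

```python
def enable_provisioned_concurrency(client, function_name, alias_name, executions):
    """Publish a version, point a new alias at it, and provision concurrency.

    `client` is expected to behave like boto3.client("lambda").
    """
    # Provisioned concurrency needs a published version, not $LATEST.
    version = client.publish_version(FunctionName=function_name)["Version"]

    # Assumption: the alias does not exist yet. On later deployments you
    # would call update_alias with the new version instead.
    client.create_alias(
        FunctionName=function_name,
        Name=alias_name,
        FunctionVersion=version,
    )

    # Qualifier selects the alias (or version) that gets the warm environments.
    return client.put_provisioned_concurrency_config(
        FunctionName=function_name,
        Qualifier=alias_name,
        ProvisionedConcurrentExecutions=executions,
    )


# Usage (requires boto3 and AWS credentials; names are placeholders):
# import boto3
# enable_provisioned_concurrency(boto3.client("lambda"), "my-function", "live", 10)
```

Callers then need to invoke the alias rather than the bare function name, otherwise requests go to $LATEST and never touch the provisioned environments.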

It's also important to understand that despite making the function run for long periods of time, provisioned concurrency does not make your application stateful. Lambda functions can and will be destroyed, and you should not treat them like an EC2 server.
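To make the distinction concrete, here is a small, hypothetical Python handler. Module-level code runs once per execution environment during initialization, and anything it leaves in memory survives between invocations in that same environment only. The names `LOADED_AT` and `invocations_seen` are purely illustrative.

```python
import time

# Module-level code runs during initialization, once per execution environment.
# With provisioned concurrency, this happens before any request arrives.
LOADED_AT = time.time()   # stand-in for expensive setup (SDK clients, config, models)
invocations_seen = 0      # in-memory state, local to THIS environment only


def handler(event, context):
    global invocations_seen
    invocations_seen += 1
    # Fine as a cache or a diagnostic counter, but not as a source of truth:
    # every other environment has its own copy, and this one can be recycled.
    return {"loaded_at": LOADED_AT, "invocations_seen": invocations_seen}
```

Two calls served by the same environment will see the counter increase, but a call routed to a different environment starts from its own value, which is why durable state belongs in an external store.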

How Much Does Provisioned Concurrency Cost?

The answer to this depends on how often your function is executed, and how often you see multiple execution environments being created to meet parallel demand.

The primary number to worry about for provisioned concurrency is the number of function executions running at the same time. For example, if you have a function that is called ten times per second, and each call lasts 500ms, that function will have on average 5 concurrent executions at any given moment (10 requests per second × 0.5 seconds each).
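The arithmetic above is just request rate multiplied by average duration. A tiny sketch, with a function name chosen for illustration:

```python
def average_concurrency(requests_per_second, avg_duration_seconds):
    """Average number of executions in flight at once (rate x duration)."""
    return requests_per_second * avg_duration_seconds


print(average_concurrency(10, 0.5))  # 5.0
```

This is an average, so bursty traffic can need noticeably more than this figure at peak.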

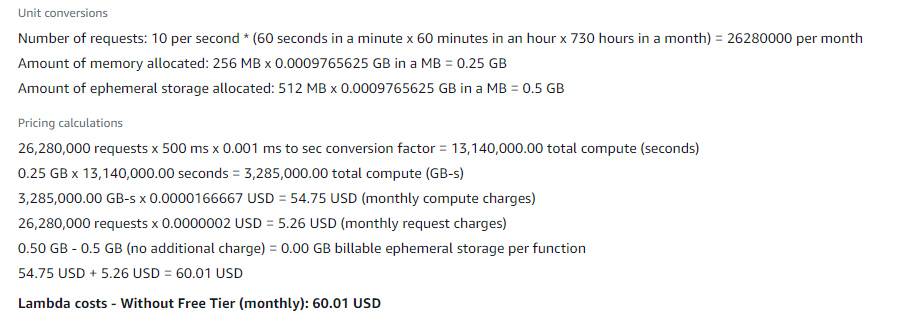

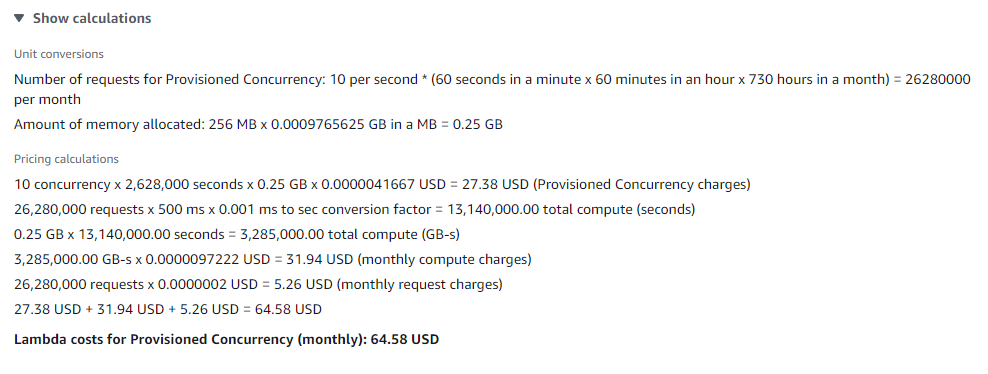

Overall, provisioned concurrency doesn't cost much more than regular Lambda functions. You can use AWS's Pricing Calculator to estimate the cost for your own workload. For example, a Lambda function called 10 times per second, with a 500ms invocation time and 256 MB of memory, will cost about $60 a month to run.

That same function with 10 provisioned executions comes out to a little more, at $64.50 per month. Overall, it's likely a small 5-10% increase in cost, depending on usage.
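A rough way to reproduce this comparison yourself is sketched below. The rates are assumptions based on commonly listed x86 prices and may not match your region or the current price list, so check the pricing page before relying on them. The sketch also ignores the free tier and assumes every invocation is served by a provisioned environment, which is why its totals land slightly below the calculator figures quoted here.

```python
SECONDS_PER_MONTH = 30 * 24 * 60 * 60  # 2,592,000

# ASSUMED rates in USD; verify against the current AWS Lambda pricing page.
REQUEST_PRICE = 0.20 / 1_000_000                # per request
ON_DEMAND_GB_SECOND = 0.0000166667              # on-demand duration
PROVISIONED_GB_SECOND = 0.0000041667            # charged while concurrency is configured
PROVISIONED_DURATION_GB_SECOND = 0.0000097222   # charged while code runs


def monthly_costs(rps, duration_s, memory_gb, provisioned):
    """Return (on_demand_cost, provisioned_cost) for one month."""
    requests = rps * SECONDS_PER_MONTH
    used_gb_seconds = requests * duration_s * memory_gb
    request_cost = requests * REQUEST_PRICE

    on_demand = request_cost + used_gb_seconds * ON_DEMAND_GB_SECOND

    configured_gb_seconds = provisioned * memory_gb * SECONDS_PER_MONTH
    with_provisioned = (
        request_cost
        + configured_gb_seconds * PROVISIONED_GB_SECOND
        + used_gb_seconds * PROVISIONED_DURATION_GB_SECOND
    )
    return on_demand, with_provisioned


on_demand, with_provisioned = monthly_costs(10, 0.5, 0.25, 10)
print(round(on_demand, 2), round(with_provisioned, 2))  # roughly 59.18 and 63.68
```

Under these assumed rates the difference is about 7.6%, which sits inside the 5-10% range.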

On the other hand, provisioned concurrency is actually cheaper per GB-second of execution time. You pay a flat fee for every second the concurrency is configured, plus a reduced duration rate while your code runs. This means that if your provisioned environments are consistently busy, running close to 100% usage, it can be cheaper to provision concurrency than to use regular Lambda pricing.
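The point at which provisioned concurrency becomes the cheaper option can be estimated from the per-GB-second rates alone. The sketch below uses assumed list prices (verify them for your region), treats utilization as the fraction of time a provisioned environment is actually executing code, and leaves out request charges because they are the same in both models.

```python
# ASSUMED rates in USD per GB-second; verify against the current pricing page.
ON_DEMAND_GB_SECOND = 0.0000166667
PROVISIONED_GB_SECOND = 0.0000041667            # flat, always charged
PROVISIONED_DURATION_GB_SECOND = 0.0000097222   # charged only while running


def cost_per_gb_second(utilization):
    """Return (on_demand, provisioned) cost of one GB-second of capacity.

    `utilization` is the fraction of time (0..1) the capacity is executing code.
    """
    on_demand = utilization * ON_DEMAND_GB_SECOND
    provisioned = PROVISIONED_GB_SECOND + utilization * PROVISIONED_DURATION_GB_SECOND
    return on_demand, provisioned


def break_even_utilization():
    """Utilization at which both pricing models cost the same."""
    return PROVISIONED_GB_SECOND / (ON_DEMAND_GB_SECOND - PROVISIONED_DURATION_GB_SECOND)


print(round(break_even_utilization(), 2))  # 0.6
```

With these assumed rates, the break-even sits at about 60% utilization. The earlier example, with an average of 5 concurrent executions against 10 provisioned, runs at 50% and so comes out slightly more expensive.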