Graphics cards are the sexiest component of all, and we've enjoyed decades of groundbreaking cards that have steered the industry one way or another. So for your consideration, here are the top ten cards we consider the most influential to date.

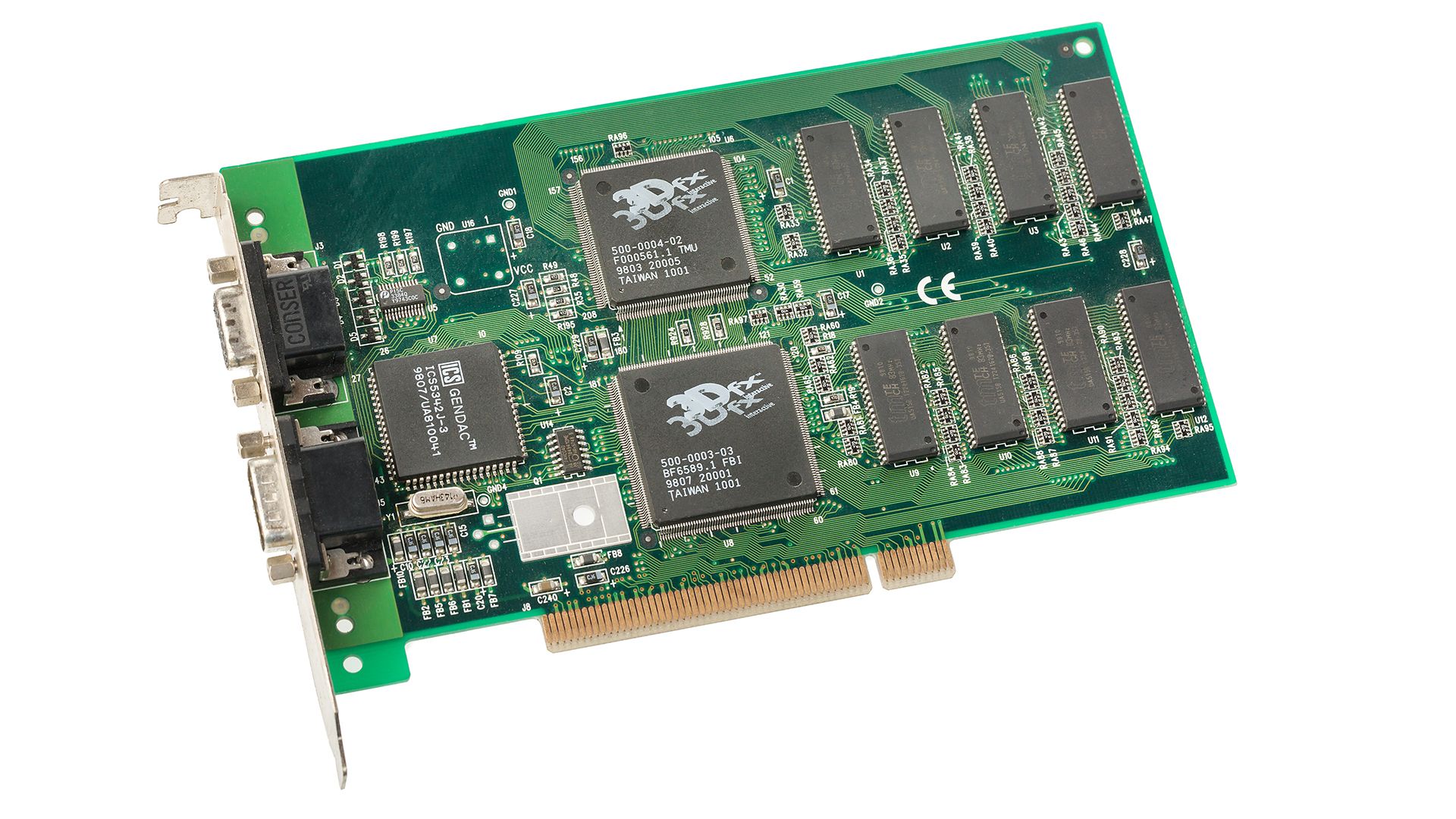

3dFX Voodoo (1996)

The 3dFX Voodoo was born in an era when the concept of 3D acceleration in consumer devices was little more than a pipe dream. It singlehandedly brought 3D-accelerated gaming from the fringes to the mainstream. With only 4MB of memory---a figure that seems almost laughable by today's standards---the Voodoo was still able to facilitate an unprecedented leap in visual performance and gaming realism.

Texture mapping and z-buffering, features that are now taken for granted, were introduced to the gaming world through the Voodoo. While the uber-popular Sony PlayStation offered players wobbly polygons thanks to low precision for its depth coordinates (the "Z" coordinates), the Voodoo offered rock-solid geometry and texture detail that made gaming consoles instantly feel obsolete.

Cards such as the S3 Virge and Matrox Mystique may technically have had these features first, but thanks to 3dFX's GLIDE graphical API and highly performant hardware, they could now be more than bullet points on a feature list and actually make a difference in real games.
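For readers curious about what z-buffering actually does, here is a minimal Python sketch of the idea. It is not how the Voodoo implemented it in hardware; the function name `draw_fragments` and the sample data are hypothetical and exist only for illustration. Each pixel remembers the depth of the nearest thing drawn so far, and a new fragment only replaces it if it is closer to the camera.

```python
def draw_fragments(fragments, width, height):
    """Resolve visibility with a depth (z) buffer.

    `fragments` is an iterable of (x, y, z, color) tuples, where a smaller
    z means closer to the camera. The tuple layout is an assumption made
    for this sketch.
    """
    far = float("inf")
    depth = [[far] * width for _ in range(height)]   # nearest depth seen per pixel
    color = [[None] * width for _ in range(height)]  # color of that nearest fragment

    for x, y, z, c in fragments:
        # The depth test: keep the fragment only if it is nearer than
        # whatever is already stored at this pixel.
        if z < depth[y][x]:
            depth[y][x] = z
            color[y][x] = c
    return color


# Three surfaces overlap at pixel (0, 0); draw order does not matter.
fragments = [
    (0, 0, 5.0, "wall"),
    (0, 0, 2.0, "crate"),
    (0, 0, 9.0, "sky"),
    (1, 0, 9.0, "sky"),
]
frame = draw_fragments(fragments, width=2, height=1)
print(frame)
```

Because the test is done per pixel, the game does not have to sort its polygons from back to front before drawing them, which is a large part of why overlapping geometry looks stable.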

I never had an original Voodoo graphics card, but I did have a Voodoo 3 2000, one of the last successful card generations the company made, and it was probably the biggest leap in graphics technology I have ever personally experienced. The only thing I remember being an issue with my last (and only) Voodoo card was a curious lack of 32-bit color support.

The effect of 3dFX is still felt to this day, as the company's IP was snapped up by NVIDIA, and is in the DNA of every NVIDIA card.

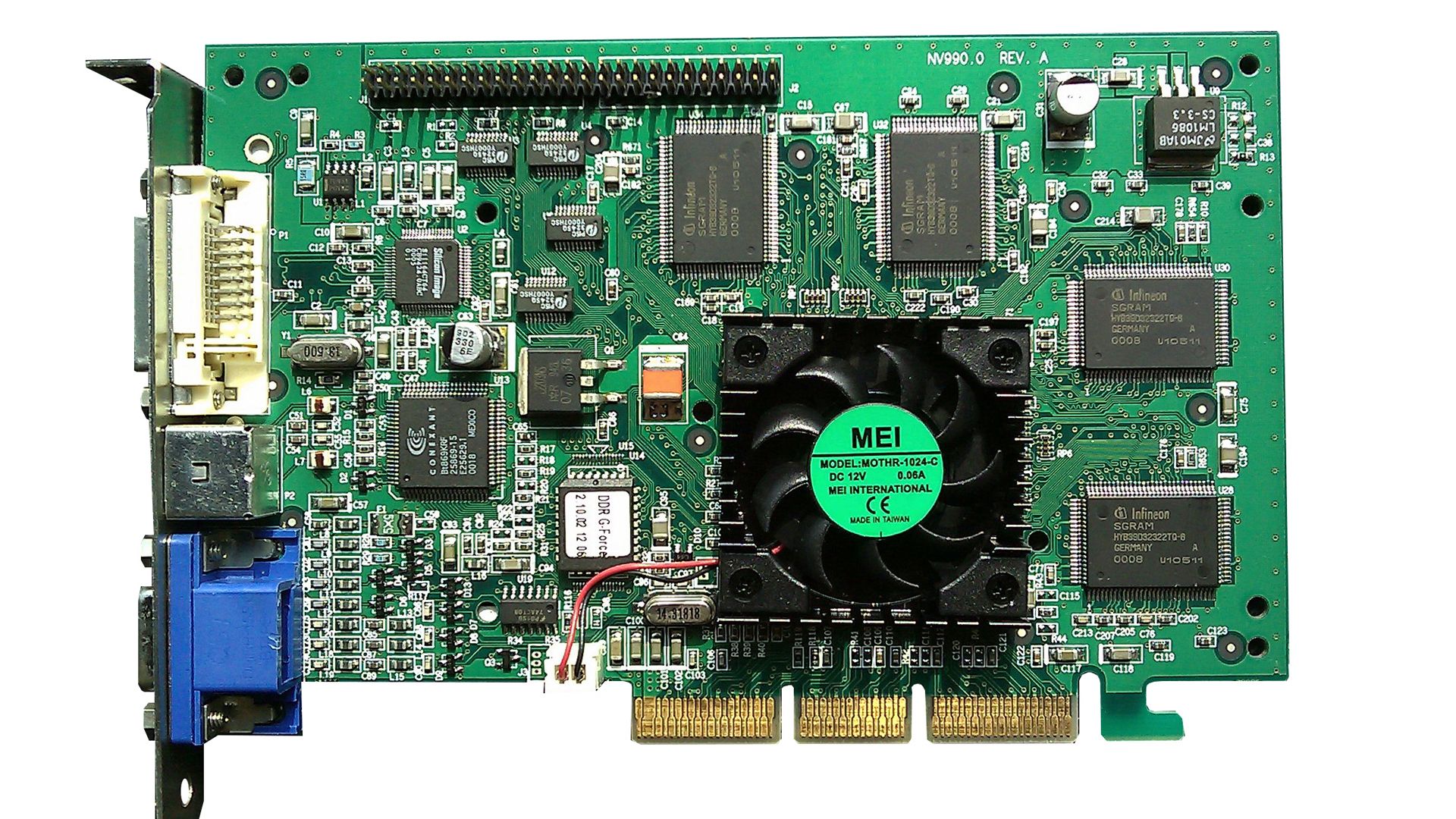

NVIDIA GeForce 256 (1999)

Enter NVIDIA with the GeForce 256. This was the first card to be marketed as a GPU, or "Graphics Processing Unit," emphasizing its ability to offload geometry calculations from the CPU. With hardware transform, clipping, and lighting (T&L) features, the GeForce 256 was all about relieving the CPU and letting the graphics card handle the heavy lifting. This change in architecture was a major turning point and set the standard for future graphics cards.

But the GeForce 256 was more than just a liberator of the CPU; it was also a symbol of NVIDIA's commitment to continually pushing the boundaries of what was possible in graphics technology. Sporting 32MB of DDR memory---a considerable upgrade from the 4MB Voodoo---the GeForce 256 proved to be a powerhouse. It offered gamers a new level of performance, enabling more detailed environments and smoother framerates.

NVIDIA's proprietary Detonator Drivers were another key feature of the GeForce 256. These drivers improved card performance, stability, and compatibility, allowing the GeForce 256 to deliver a consistently high-quality gaming experience.

I never had the chance to own a GeForce 256, but I did own its predecessor, the Riva TNT2. Well, I had the budget TNT2 M64, but it sure felt like a "GPU" to me, especially since it was all one cohesive card. Still, the GeForce 256 was the card that coined the term, and it had the under-the-hood features to justify that name.

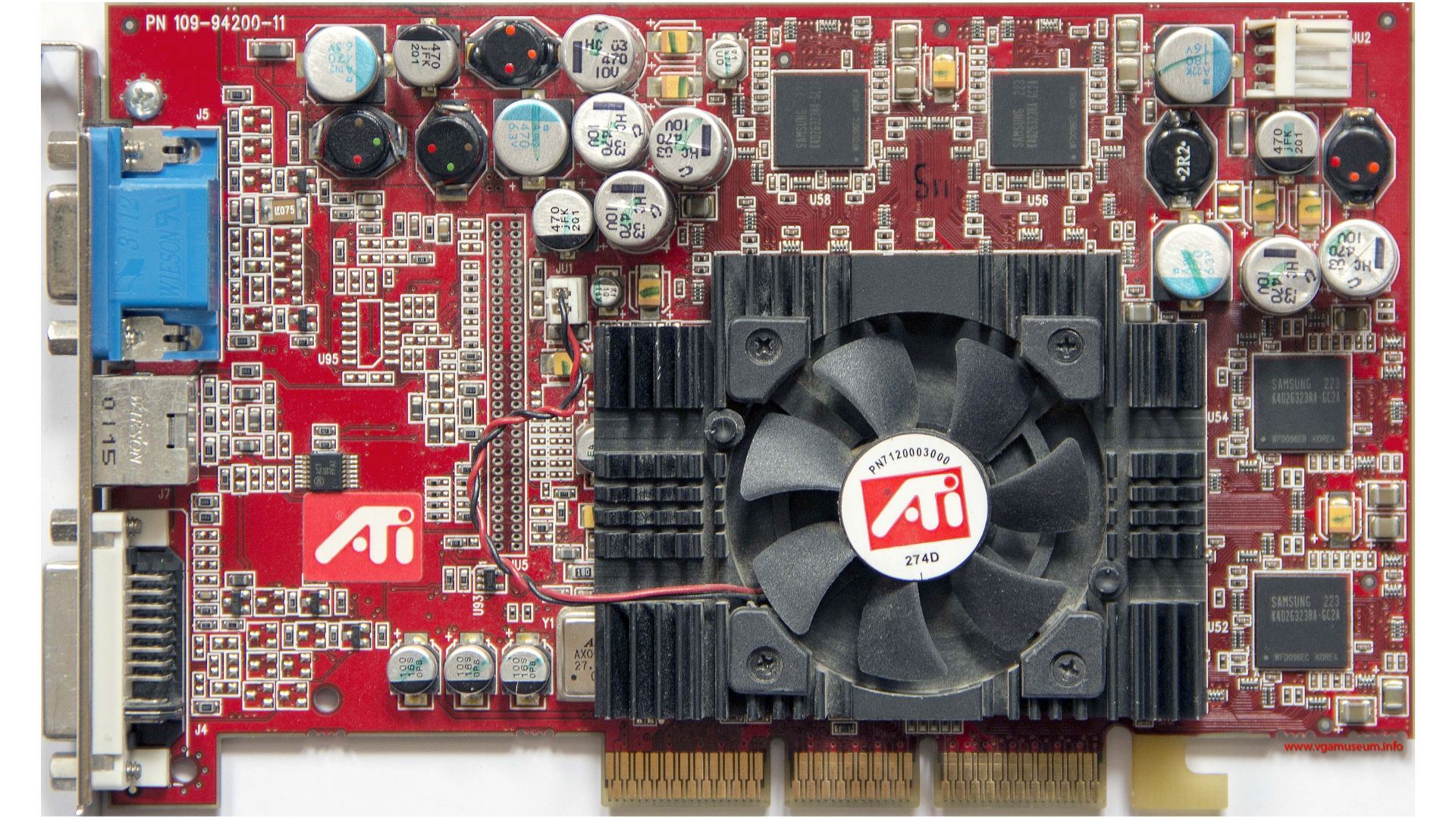

ATI Radeon 9700 Pro (2002)

When it comes to being ahead of its time, the ATI Radeon 9700 Pro takes the cake. It was the first DirectX 9 card from ATI, and it competed with NVIDIA's DX9 cards like the FX 5800. However, unlike NVIDIA's infamous "dust buster" FX cards, the 9700 Pro was far quieter, cooler, and more power-efficient.

The Radeon 9700 Pro symbolized ATI's intention to be a contender in the high-end graphics card market---a position it solidified over the years as it continued to innovate and compete with the likes of NVIDIA. The card's success helped establish ATI (and later, AMD) as a worthy competitor in the GPU market, setting the stage for a fierce rivalry that would drive innovation for years to come.

This era is also notable as the first (and last) time I fell for the "halo" card trap. The 9700 Pro was so hyped that I would do anything to have a little taste of it. Unfortunately, that taste was the Radeon 9200 SE, which was such an awful card that I traded it for the almost-but-not-quite-as-bad GeForce FX 5200.

NVIDIA GeForce 6800 Ultra (2004)

NVIDIA swung back with the GeForce 6800 Ultra in 2004. This beastly (for the time) card was the first to support Shader Model 3.0 and High Dynamic Range (HDR) rendering. Additionally, the 6800 Ultra offered tremendous forward compatibility, ensuring that it could handle games and applications that emerged years after its release. Its robust feature set and longevity made it a favorite among enthusiasts.

Powering these features was the NV40 architecture, a technological marvel in its own right. This architecture housed 222 million transistors, more than twice the number found in the Radeon 9700 Pro. Additionally, the 6800 Ultra was outfitted with an impressive 16 pixel pipelines and 6 vertex shaders, ensuring that it had the raw power to handle the most demanding games of the time as well as future titles.

While the X800XT cards often beat the 6800 Ultra in pure performance, it was the promised future-proofing that made this card special. Whether this actually panned out for buyers is debatable, but what makes it so influential is that today you don't really expect to be locked out of new games on an old GPU because of missing features, but rather because of a lack of performance. You can still play modern games on cards that are several generations old, frame rate aside.

The actual card I bought during this era was the GeForce 6600 AGP. I couldn't even afford the GT model, but having those new features supported meant that I could play Half-Life 2 and Doom 3, which was literally impossible on older cards that did not support the API features those games needed.

NVIDIA GeForce 8800 GT (2007)

The GeForce 8800 GT is a classic example of NVIDIA at its best. It introduced DirectX 10 support and came with the revolutionary unified shader architecture, which meant it could adjust how it allocated resources based on workload. This allowed for more efficient operation and better performance. For many, the 8800 GT represented the sweet spot in terms of price and performance, making high-end gaming more accessible.

The GeForce 8800 GT represented the pinnacle of NVIDIA's G80 architecture, leveraging its innovative unified shader model to great effect. Unlike previous designs where pixel shaders and vertex shaders were separate entities, the 8800 GT's 112 Stream Processors handled both types of shading. This resulted in more efficient processing and increased performance.

On a personal note, the 8800 GT is the best graphics card I have ever bought. It changed the game completely. I had mistakenly bought the awful 8600 GT, thinking that, like before, this would be the mid-range king, but games like Oblivion and the first Witcher game were near unplayable. When I first saw the benchmarks for the 8800 GT I could not believe the price, but after buying the cheapest model I could find, it was all true. Best of all, this card lasted throughout the entire PS3 and Xbox 360 generation, by being solidly ahead of those systems technologically and therefore smashing any multiplatform games that had PC ports. Good times!

ATI Radeon HD 4870 (2008)

What makes this card special is that it's the first ever card to hit and pass the one teraflop mark. To be precise, it clocked in at 1.2TF! These days we talk about GPUs in terms of how many teraflops they are, but at the time, reaching this level of computational power was stepping on the toes of supercomputing.

Constructed with the same RV770 architecture as the (equally awesome and more affordable) HD 4850, the 4870 distinguished itself by running at higher clock speeds, boasting an impressive 750 MHz. This, combined with its 800 stream processors, allowed the 4870 to surpass the performance of many of its competitors.
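The 1.2TF figure can be reproduced from the clock speed and stream processor count quoted above. The short Python sketch below assumes the usual way peak throughput is quoted: each stream processor is counted as completing one multiply-add (two floating-point operations) per clock cycle. That factor of two is an assumption of this sketch, and the function name `peak_teraflops` is made up for illustration.

```python
def peak_teraflops(shader_count, clock_mhz, flops_per_clock=2):
    """Theoretical peak throughput in teraflops.

    flops_per_clock=2 assumes one multiply-add per shader per cycle,
    which is how peak figures are conventionally quoted.
    """
    flops = shader_count * flops_per_clock * clock_mhz * 1e6
    return flops / 1e12


# Radeon HD 4870: 800 stream processors at 750 MHz (figures from the text).
hd4870 = peak_teraflops(shader_count=800, clock_mhz=750)
print(f"Radeon HD 4870: {hd4870:.1f} TFLOPS")
```

This is a paper number, not a benchmark: real games rarely keep every stream processor busy on every cycle, so the same formula is best used to compare cards within a family rather than to predict frame rates.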

The Radeon HD 4870 was a trendsetter, ushering in the era of GDDR5 memory in graphics cards. GDDR5 offered double the data rate of GDDR3, translating into faster, smoother, and more detailed graphics.

While a teraflop is just an arbitrary number, just like breaking the 1GHz barrier for CPUs, this had a psychological effect. It signaled we were in a new bracket of performance.

What's interesting is that gamers today are still enjoying games that are more or less in this GPU performance class. Although they have much more texture memory, devices like the Nintendo Switch and Xbox One have similar raw performance, and plenty of people still use both.

NVIDIA GeForce GTX 970 (2014)

The GTX 970 is arguably one of the most popular graphics cards of the modern era. Offering high performance at an affordable price, it became the go-to GPU for a large portion of gamers. More than just its raw performance, it introduced features like Dynamic Super Resolution, making it possible for games to be rendered at higher resolutions and then downscaled for a sharper image.
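To see why rendering at a higher resolution and then downscaling gives a cleaner image, here is a small Python sketch of the general idea. It uses a plain 2x2 box average on a tiny grayscale image, which is a simplification chosen for this example and not NVIDIA's actual Dynamic Super Resolution filter; the function name `downscale_2x` is hypothetical.

```python
def downscale_2x(image):
    """Average each 2x2 block of a grayscale image into a single pixel.

    `image` is a list of rows of brightness values (0 to 255). A simple
    box filter is assumed here purely for illustration.
    """
    height, width = len(image), len(image[0])
    if height % 2 or width % 2:
        raise ValueError("image dimensions must be even")
    return [
        [
            (image[y][x] + image[y][x + 1]
             + image[y + 1][x] + image[y + 1][x + 1]) / 4
            for x in range(0, width, 2)
        ]
        for y in range(0, height, 2)
    ]


# A hard diagonal edge rendered at 4x4, then shrunk to the 2x2 "display".
high_res = [
    [0,   0,   0,   255],
    [0,   0,   255, 255],
    [0,   255, 255, 255],
    [255, 255, 255, 255],
]
low_res = downscale_2x(high_res)
print(low_res)
```

The pixels along the edge end up as in-between shades rather than pure black or white, which is what softens the stair-stepping you would see if the scene had been rendered directly at the lower resolution.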

Underneath its sleek exterior, the GeForce GTX 970 was brimming with ground-breaking technology for the time. The card was one of the first to feature NVIDIA's Maxwell architecture, which focused on delivering higher performance per watt than previous generations. This architectural choice made the GTX 970 a surprisingly efficient card, as it delivered performance that rivaled or even surpassed some high-end options from the previous generation while consuming significantly less power.

It's worth noting that controversy emerged around the GTX 970's memory configuration, as it was later revealed that the last 0.5GB of memory (of its 4GB allocation) operated much slower than the rest, making this card equally infamous and iconic.

Just like the 8800 GT, this card provided so much bang for the buck compared to the flagship in its series that it seemed like a no-brainer. Crucially, though, it was the significant improvement in power efficiency that seemed like the start of a trend, one that really hit its stride with the next generation of cards from NVIDIA.

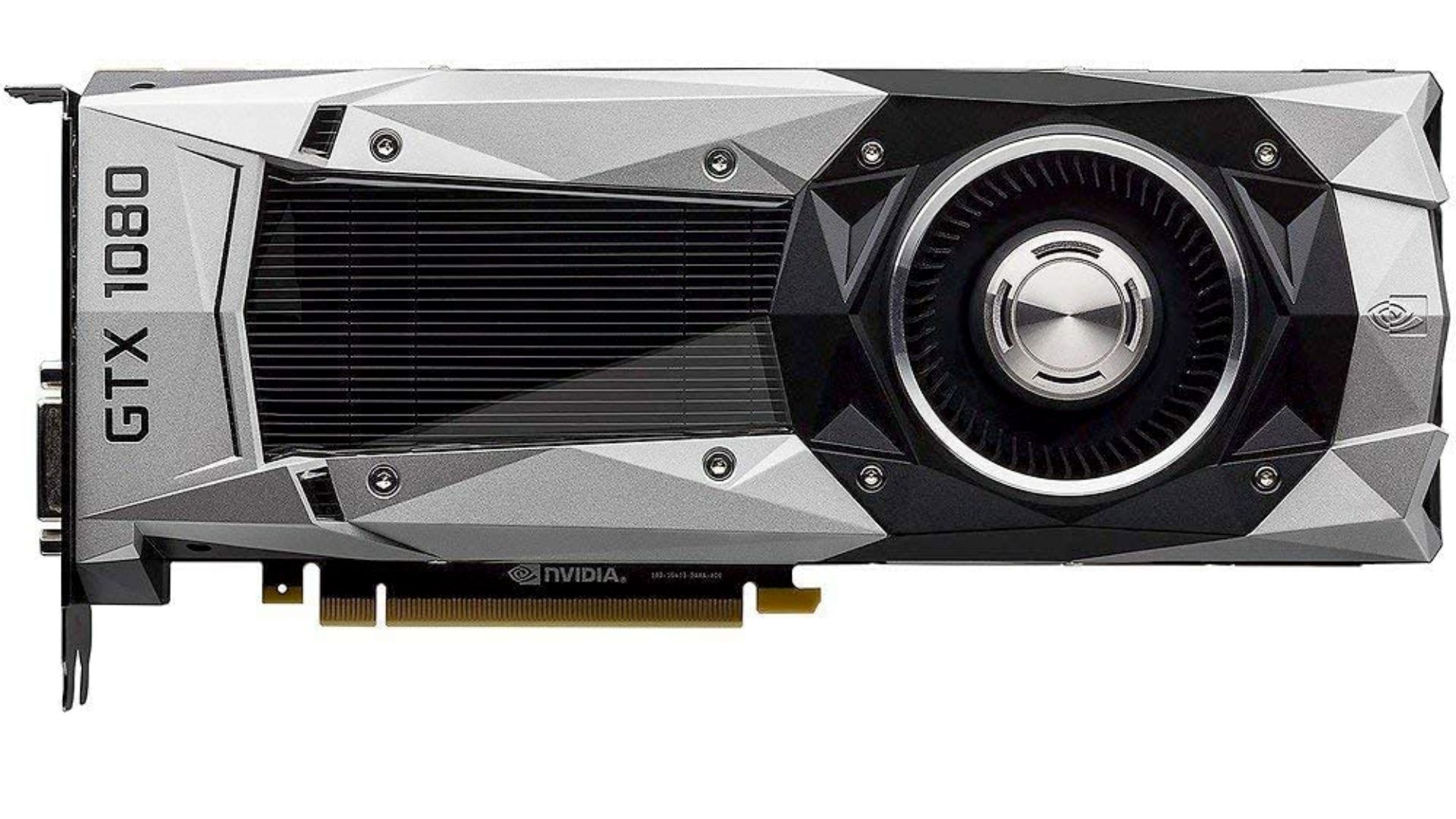

NVIDIA GeForce GTX 1080 (2016)

The GTX 1080 was the first to use the Pascal architecture, leading to significant improvements in power efficiency and performance. This card was also the first to break the 2 GHz barrier, bringing ultra-high-resolution gaming into the mainstream. Virtual Reality gaming, too, found a champion in the GTX 1080, as its performance levels offered the smooth experience VR demands.

The power efficiency leap with Pascal was so dramatic that it caused a revolution in laptop graphics as well. No longer were laptop GPUs a generation or two behind. These were exactly the same GPUs you'd find in the desktop version, just clocked down.

Even today, the 1080 is still a totally viable GPU and you'll find plenty of gaming enthusiasts on various forums bragging about how much life they've gotten out of their beloved 1080s. As long as you're aiming for 1080p or 1440p resolutions, it will still run games with decent levels of detail and playable frame rates.

The 1080 showed that a flagship GPU didn't have to be a PSU-devouring beast to bring the performance heat, and it totally deserves its place on this list.

AMD Radeon RX Vega 64 (2017)

The Vega 64 from AMD marked a significant step forward for the company. With 12.66 teraflops of raw performance and a powerful new geometry engine, it brought stiff competition to the high-end market. This card represented AMD's commitment to high-end gaming and computational power, making it a crucial piece of the modern GPU landscape.

The Radeon Vega 64 was AMD's first consumer-level graphics card to use High Bandwidth Memory 2 (HBM2), a memory architecture offering a significantly smaller physical footprint and higher bandwidth than traditional GDDR5 and GDDR5X solutions. The Vega 64 came with 8GB of HBM2 memory, delivering incredible bandwidth for graphically intensive tasks.

While AMD seems to have abandoned HBM for the time being, this card shows its influence in how modern GPUs focus strongly on memory bandwidth, especially for cards targeting higher resolutions. It was also a shot across the bow by AMD to NVIDIA, showing that they were still willing to tackle the high end of the market and experiment with technologies that were from left field, rather than playing it safe and incremental.

NVIDIA GeForce RTX 3090 (2020)

Rounding off our list of influential graphics cards, we arrive at the monolithic NVIDIA GeForce RTX 3090, released in 2020. As the flagship model in NVIDIA's RTX 3000 series, the RTX 3090 was a showcase of the latest and most advanced GPU technologies, setting a new benchmark for what was possible in PC gaming.

At the heart of the RTX 3090 was the GA102 chip, fabricated using a cutting-edge 8nm process. With a staggering 10,496 CUDA cores and a base clock speed of 1395 MHz, the RTX 3090 offered raw performance that was simply unmatched at the time of its release.

However, it was the RTX 3090's memory that truly set it apart from its contemporaries. The card boasted 24GB of ultra-fast GDDR6X memory, offering incredible bandwidth and more than enough capacity for even the most demanding games and applications.

What was interesting about the 3090 is that NVIDIA told gamers, with a wink and a nudge, that it was essentially this generation's Titan card. It wasn't meant for gaming, although it would blow away the "true" flagship RTX 3080 in any test, and ran the same game-ready drivers.

You could look at the 3090 in two ways: as an uber-expensive gaming card that didn't offer a good frames-per-dollar deal compared to the card right below it, or as an extremely cheap workstation card. After all, with 24GB of VRAM and NVIDIA's AI and ray-tracing acceleration hardware, you could do far more with this card than play video games, and on the weekends you could experience the best performance in gaming.

I expect that the 3090 was the start of a trend, and as of 2023 the 4090 offers a very similar deal, which seems to appeal to both budget workstation professionals and high-budget gamers, since NVIDIA can't seem to keep these BFGPUs in stock.

Now with Intel getting serious about entering as a third competitor in the high-end discrete GPU market, I have no doubt that there are at least as many iconic graphics cards we'll get to enjoy over the coming decades, and I really can't wait to meet them!

Finally, a special thanks to the VGA Museum for the wonderful photos you see in most of the entries above, which they make available for use in articles. They have a huge collection of classic graphics card photos as well as data sheets and interesting facts on GPUs across history---and they do it all as a labor of love floated by donations. If you're feeling extra nostalgic about the GPUs of yesteryear, it's worth a visit!